In warehouses, call centers, and other sectors, intelligent machines are managing humans, and they’re making work more stressful, grueling, and dangerous

On conference stages and at campaign rallies, tech executives and politicians warn of a looming automation crisis — one where workers are gradually, then all at once, replaced by intelligent machines. But their warnings mask the fact that an automation crisis has already arrived. The robots are here, they’re working in management, and they’re grinding workers into the ground.

The robots are watching over hotel housekeepers, telling them which room to clean and tracking how quickly they do it. They’re managing software developers, monitoring their clicks and scrolls and docking their pay if they work too slowly. They’re listening to call center workers, telling them what to say and how to say it, and keeping them constantly, maximally busy. While we’ve been watching the horizon for the self-driving trucks, perpetually five years away, the robots arrived in the form of the supervisor, the foreman, the middle manager.

These automated systems can detect inefficiencies that a human manager never would — a moment’s downtime between calls, a habit of lingering at the coffee machine after finishing a task, a new route that, if all goes perfectly, could get a few more packages delivered in a day. But for workers, what look like inefficiencies to an algorithm were their last reserves of respite and autonomy, and as these little breaks and minor freedoms get optimized out, their jobs are becoming more intense, stressful, and dangerous. Over the last several months, I’ve spoken with more than 20 workers in six countries. For many of them, their greatest fear isn’t that robots might come for their jobs: it’s that robots have already become their boss.

In few sectors are the perils of automated management more apparent than at Amazon. Almost every aspect of management at the company’s warehouses is directed by software, from when people work to how fast they work to when they get fired for falling behind. Every worker has a “rate,” a certain number of items they have to process per hour, and if they fail to meet it, they can be automatically fired.

When Jake* started working at a Florida warehouse, he was surprised by how few supervisors there were: just two or three managing a workforce of more than 300. “Management was completely automated,” he said. One supervisor would walk the floor, laptop in hand, telling workers to speed up when their rate dropped. (Amazon said its system notifies managers to talk to workers about their performance, and that all final decisions on personnel matters, including terminations, are made by supervisors.)

Jake, who asked to use a pseudonym out of fear of retribution, was a “rebinner.” His job was to take an item off a conveyor belt, press a button, place the item in whatever cubby a monitor told him to, press another button, and repeat. He likened it to doing a twisting lunge every 10 seconds, nonstop, though he was encouraged to move even faster by a giant leaderboard, featuring a cartoon sprinting man, that showed the rates of the 10 fastest workers in real time. A manager would sometimes keep up a sports announcer patter over the intercom — “In third place for the first half, we have Bob at 697 units per hour,” Jake recalled. Top performers got an Amazon currency they could redeem for Amazon Echos and company T-shirts. Low performers got fired.

“You’re not stopping,” Jake said. “You are literally not stopping. It’s like leaving your house and just running and not stopping for anything for 10 straight hours, just running.”

After several months, he felt a burning in his back. A supervisor sometimes told him to bend his knees more when lifting. When Jake did this his rate dropped, and another supervisor would tell him to speed up. “You’ve got to be kidding me. Go faster?” he recalled saying. “If I go faster, I’m going to have a heart attack and fall on the floor.” Finally, his back gave out completely. He was diagnosed with two damaged discs and had to go on disability. The rate, he said, was “100 percent” responsible for his injury.

Every Amazon worker I’ve spoken to said it’s the automatically enforced pace of work, rather than the physical difficulty of the work itself, that makes the job so grueling. Any slack is perpetually being optimized out of the system, and with it any opportunity to rest or recover. A worker on the West Coast told me about a new device that shines a spotlight on the item he’s supposed to pick, allowing Amazon to further accelerate the rate and get rid of what the worker described as “micro rests” stolen in the moment it took to look for the next item on the shelf.

People can’t sustain this level of intense work without breaking down. Last year, ProPublica, BuzzFeed, and others published investigations about Amazon delivery drivers careening into vehicles and pedestrians as they attempted to complete their demanding routes, which are algorithmically generated and monitored via an app on drivers’ phones. In November, Reveal analyzed documents from 23 Amazon warehouses and found that almost 10 percent of full-time workers sustained serious injuries in 2018, more than twice the national average for similar work. Multiple Amazon workers have told me that repetitive stress injuries are epidemic but rarely reported. (An Amazon spokesperson said the company takes worker safety seriously, has medical staff on-site, and encourages workers to report all injuries.) Backaches, knee pain, and other symptoms of constant strain are common enough for Amazon to install painkiller vending machines in its warehouses.

The unrelenting stress takes a toll of its own. Jake recalled yelling at co-workers to move faster, only to wonder what had come over him and apologize. By the end of his shift, he would be so drained that he would go straight to sleep in his car in the warehouse parking lot before making the commute home. “A lot of people did that,” he said. “They would just lay back in their car and fall asleep.” A worker in Minnesota said that the job had been algorithmically intensified to the point that it called for rethinking long-standing labor regulations. “The concept of a 40-hour work week was you work eight hours, you sleep eight hours, and you have eight hours for whatever you want to do,” he said. “But [what] if you come home from work and you just go straight to sleep and you sleep for 16 hours, or the day after your work week, the whole day you feel hungover, you can’t focus on things, you just feel like shit, you lose time outside of work because of the aftereffects of work and the stressful, strenuous conditions?”

Workers inevitably burn out, but because each task is minutely dictated by machine, they are easily replaced. Jake estimated he was hired along with 75 people, that he was the only one remaining when his back finally gave out, and that most of their positions had turned over twice. “You’re just a number, they can replace you with anybody off the street in two seconds,” he said. “They don’t need any skills. They don’t need anything. All they have to do is work real fast.”

There are robots of the ostensibly job-stealing variety in Amazon warehouses, but they’re not the kind that worry most workers. In 2014, Amazon started deploying shelf-carrying robots, which automated the job of walking through the warehouse to retrieve goods. The robots were so efficient that more humans were needed in other roles to keep up, Amazon built more facilities, and the company now employs almost three times the number of full-time warehouse workers it did when the robots came online. But the robots did change the nature of the work: rather than walking around the warehouse, workers stood in cages removing items from the shelves the robots brought them. Employees say it is one of the fastest-paced and most grueling roles in the warehouse. Reveal found that injuries were more common in warehouses with the robots. That makes sense: the problem is the pace, and the machines that most concern workers are the ones that enforce it.

Last year saw a wave of worker protests at Amazon facilities. Almost all of them were sparked by automated management leaving no space for basic human needs. In California, a worker was automatically fired after she overdrew her quota of unpaid time off by a single hour following a death in her family. (She was rehired after her co-workers submitted a petition.) In Minnesota, workers walked off the job to protest the accelerating rate, which they said was causing injuries and leaving no time for bathroom breaks or religious observance. To satisfy the machine, workers felt they were forced to become machines themselves. Their chant: “We are not robots.”

Every industrial revolution is as much a story of how we organize work as it is of technological invention. Steam engines and stopwatches had been around for decades before Frederick Taylor, the original optimizer, used them to develop the modern factory. Working in a late-19th-century steel mill, he simplified and standardized each role and wrote detailed instructions on notecards; he timed each task to the second and set an optimal rate. In doing so, he broke the power skilled artisans held over the pace of production and began an era of industrial growth, and also one of exhausting, repetitive, and dangerously accelerating work.

It was Henry Ford who most fully demonstrated the approach’s power when he further simplified tasks and arranged them along an assembly line. The speed of the line controlled the pace of the worker and gave supervisors an easy way to see who was lagging. Laborers absolutely hated it. The work was so mindless and grueling that people quit in droves, forcing Ford to double wages. As these methods spread, workers frequently struck or slowed down to protest “speedups” — supervisors accelerating the assembly line to untenable rates.

We are in the midst of another great speedup. There are many factors behind it, but one is the digitization of the economy and the new ways of organizing work it enables. Take retail: workers no longer stand around in stores waiting for customers; with e-commerce, their roles are split. Some work in warehouses, where they fulfill orders nonstop, and others work in call centers, where they answer question after question. In both spaces, workers are subject to intense surveillance. Their every action is tracked by warehouse scanners and call center computers, which provide the data for the automated systems that keep them working at maximum capacity.

At the most basic level, automated management starts with the schedule. Scheduling algorithms have been around since the late 1990s, when stores began using them to predict customer traffic and generate shifts to match it. These systems did the same thing a business owner would do when they scheduled fewer workers for slow mornings and more for the lunchtime rush, trying to maximize sales per worker hour. The software was just better at it, and it kept improving, factoring in variables like weather or nearby sporting events, until it could forecast the need for staff in 15-minute increments.

The software is so accurate that it could be used to generate humane schedules, said Susan Lambert, a professor at the University of Chicago who studies scheduling instability. Instead, it’s often used to coordinate the minimum number of workers required to meet forecasted demand, if not slightly fewer. This isn’t even necessarily the most profitable approach, she noted, citing a study she did on the Gap: it’s just easier for companies and investors to quantify cuts to labor costs than the sales lost because customers don’t enjoy wandering around desolate stores. But if it’s bad for customers, it’s worse for workers, who must constantly race to run businesses that are perpetually understaffed.

Though they started in retail, scheduling algorithms are now ubiquitous. At the facilities where Amazon sorts goods before delivery, for example, workers are given skeleton schedules and get pinged by an app when additional hours in the warehouse become available, sometimes as little as 30 minutes before they’re needed. The result is that no one ever experiences a lull.

The emergence of cheap sensors, networks, and machine learning allowed automated management systems to take on a more detailed supervisory role — and not just in structured settings like warehouses, but wherever workers carried their devices. Gig platforms like Uber were the first to capitalize on these technologies, but delivery companies, restaurants, and other industries soon adopted their techniques.

There was no single breakthrough in automated management, but as with the stopwatch, revolutionary technology can appear mundane until it becomes the foundation for a new way of organizing work. When rate-tracking programs are tied to warehouse scanners or taxi drivers are equipped with GPS apps, it enables management at a scale and level of detail that Taylor could only have dreamed of. It would have been prohibitively expensive to employ enough managers to time each worker’s every move to a fraction of a second or ride along in every truck, but now it takes maybe one. This is why the companies that most aggressively pursue these tactics all take on a similar form: a large pool of poorly paid, easily replaced, often part-time or contract workers at the bottom; a small group of highly paid workers who design the software that manages them at the top.

This is not the industrial revolution we’ve been warned about by Elon Musk, Mark Zuckerberg, and others in Silicon Valley. They remain fixated on the specter of job-stealing AI, which is portrayed as something both fundamentally new and extraordinarily alarming: a “buzz saw,” in the words of Andrew Yang, coming for society as we know it. As apocalyptic visions go, it’s a uniquely flattering one for the tech industry, which is in the position of warning the world about its own success, sounding the alarm that it has invented forces so powerful they will render human labor obsolete forever. But in its civilization-scale abstraction, this view misses the ways technology is changing the experience of work, and with its sense of inevitability, it undermines concern for many of the same people who find themselves managed by machines today. Why get too worked up over conditions for warehouse workers, taxi drivers, content moderators, or call center representatives when everyone says those roles will be replaced by robots in a few years? The tech industry’s policy proposals are as abstract as its diagnosis, basically amounting to giving people money once the robots come for them.

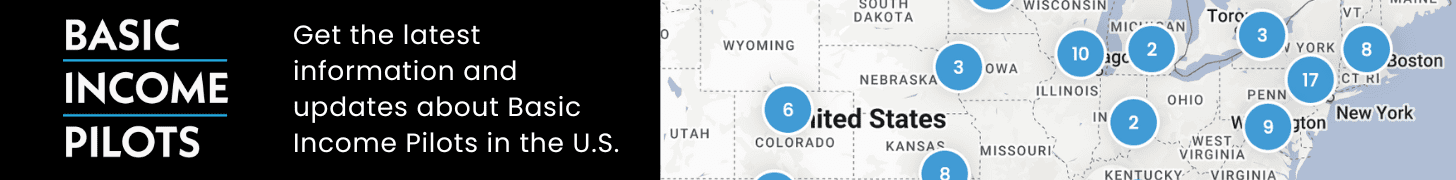

Maybe the robots will someday come for the truck drivers and everyone else, though automation’s net impact on jobs so far has been less than catastrophic. Technology will undoubtedly put people out of work, as it has in the past, and it’s worth thinking about how to provide them a safety net. But one likely scenario is that those truckers will find themselves not entirely jobless but, as an analysis by the UC Berkeley Center for Labor Research and Education suggests, riding along to help mostly autonomous vehicles navigate tricky city streets, earning lower pay in heavily monitored and newly de-skilled jobs. Or maybe they will be in call center-like offices, troubleshooting trucks remotely, their productivity tracked by an algorithm. In short, they will find themselves managed by machines, subject to forces that have been growing for years but are largely overlooked by AI fetishism.

“The robot apocalypse is here,” said Joanna Bronowicka, a researcher with the Centre for Internet and Human Rights and a former candidate for European Parliament. “It’s just that the way we’ve crafted these narratives, and unfortunately people from the left and right and people like Andrew Yang and people in Europe that talk about this topic are contributing to it, they are using a language of the future, which obscures the actual lived reality of people right now.”

This isn’t to say that the future of AI shouldn’t worry workers. In the past, for jobs to be automatically managed, they had to be broken down into tasks that could be measured by machines — the ride tracked by GPS, the item scanned in a warehouse. But machine learning is capable of parsing much less structured data, and it’s making new forms of work, from typing at a computer to conversations between people, ready for robot bosses.

Angela* worked in an insurance call center for several years before quitting in 2015. Like many call center jobs, the work was stressful: customers were often distraught, software tracked the number and length of her calls, and managers would sometimes eavesdrop on the line to evaluate how she was doing. But when she returned to the industry last year, something had changed. In addition to the usual metrics, there was a new one — emotion — and it was assessed by AI.

The software Angela encountered was from Voci, one of many companies using AI to evaluate call center workers. Angela’s other metrics were excellent, but the program consistently marked her down for negative emotions, which she found perplexing because her human managers had previously praised her empathetic manner on the phone. No one could tell her exactly why she was getting penalized, but her best guess was that the AI was interpreting her fast-paced and loud speaking style, periods of silence (a result of trying to meet a metric meant to minimize putting people on hold), and expressions of concern as negative.

“It makes me wonder if it’s privileging fake empathy, sounding really chipper and being like, ‘Oh, I’m sorry you’re dealing with that,’” said Angela, who asked to use a pseudonym out of fear of retribution. “Feeling like the only appropriate way to display emotion is the way that the computer says, it feels very limiting. It also seems to not be the best experience for the customer, because if they wanted to talk to a computer, then they would have stayed with IVR [Interactive Voice Response].”

A Voci spokesperson said the company trained its machine learning program on thousands of hours of audio that crowdsourced workers labeled as demonstrating positive or negative emotions. He acknowledged that these assessments are subjective, but said that in the aggregate they should control for variables like tone and accent. Ultimately, the spokesperson said Voci provides an analysis tool and call centers decide how to use the data it provides.

Angela’s troubles with Voci made her apprehensive about the next round of automation. Her call center was in the process of implementing software from Clarabridge that would automate parts of call evaluations still done by humans, like whether agents said the proper phrases. Her center also planned to expand its use of Cogito, which uses AI to coach workers in real time, telling them to speak more slowly or with more energy or to express empathy.

When people list jobs slated for automation, call center workers come just after truck drivers. Their jobs are repetitive, and machine learning has enabled rapid progress in speech recognition. But machine learning struggles with highly specific and unique tasks, and often people just want to talk to a human, so it’s the managerial jobs that are getting automated. Google, Amazon, and a plethora of smaller companies have announced AI systems that listen to calls and coach workers or automatically assess their performance. The company CallMiner, for example, advertises AI that rates workers’ professionalism, politeness, and empathy — which, in a demo video, it shows being measured to a fraction of a percent.

Workers say these systems are often clumsy judges of human interaction. One worker claimed they could meet their empathy metrics just by saying “sorry” a lot. Another worker at an insurance call center said that Cogito’s AI, which is supposed to tell her to express empathy when it detects a caller’s emotional distress, seemed to be triggered by tonal variation of any kind, even laughter. Her co-worker had a call pulled for review by supervisors because Cogito’s empathy alarm kept going off, but when they listened to the recording, it turned out the caller had been laughing with joy over the birth of a child. The worker, however, was busy filling out forms and only paying half-attention to the conversation, so she kept obeying the AI and saying “I’m sorry,” much to the caller’s confusion.

Cogito said its system is “highly accurate and does not frequently give false positives,” and that when it does, call center agents can use their own judgment to adapt to the situation, because the system augments rather than replaces humans.

As these systems spread, it will be important to assess them for accuracy and bias, but they also pose a more basic question: why are so many companies trying to automate empathy to begin with? The answer has to do with the way automation itself has made work more intense.

In the past, workers might have handled a complex or emotionally fraught call mixed in with a bunch of simple, “I forgot my password” type calls, but bots now handle the easy ones. “We don’t have the easy calls to give them the mental refresh that we used to be able to give them,” said Ian Jacobs of research company Forrester. Automated systems also collect customer information and help fill out forms, which would make the job easier, except that any downtime is tracked and filled with more calls.

The worker who used Cogito, for instance, had only a minute to fill out insurance forms between calls and only 30 minutes per month for bathroom breaks and personal time, so she handled call after call from people dealing with terminal illnesses, dying relatives, miscarriages, and other traumatic events, each of which she was supposed to complete in fewer than 12 minutes, for 10 hours a day. “It makes you feel numb,” she said. Other workers spoke of chronic anxiety and insomnia, the result of days spent having emotionally raw conversations while, in the words of one worker, “your computer is standing over your shoulder and arbitrarily deciding whether you get to keep your job or not.” This form of burnout has become so common the industry has a name for it: “empathy fatigue.” Cogito, in an ebook explaining the reason for its AI, likens call center workers to trauma nurses desensitized over the course of their shift, noting that the quality of representatives’ work declines after 25 calls. The solution, the company writes, is to use AI to deliver “empathy at scale.”

It’s become conventional wisdom that interpersonal skills like empathy will be one of the roles left to humans once the robots take over, and this is often treated as an optimistic future. But call centers show how it could easily become a dark one: automation increasing the empathy demanded of workers and automated systems used to wring more empathy from them, or at least a machine-readable approximation of it. Angela, the worker struggling with Voci, worried that as AI is used to counteract the effects of dehumanizing work conditions, her work will become more dehumanizing still.

“Nobody likes calling a call center,” she said. “The fact that I can put the human touch in there, and put my own style on it and build a relationship with them and make them feel like they’re cared about is the good part of my job. It’s what gives me meaning. But if you automate everything, you lose the flexibility to have a human connection.”

Mak Rony was working as a software engineer in Dhaka, Bangladesh, when he saw a Facebook ad for an Austin-based company called Crossover Technologies. Rony liked his current job, but the Crossover role seemed like a step up: the pay was better — $15 an hour — and the ad said he could work whenever he wanted and do it from home.

On his first day, he was told to download a program called WorkSmart. In a video, Crossover CEO Andy Tryba describes the program as a “Fitbit for work.” The modern worker is constantly interacting with cloud apps, he says, and that produces huge quantities of information about how they’re spending their time, information that’s mostly thrown away. That data should be used to enhance productivity, he says. Citing Cal Newport’s popular book Deep Work, about the perils of distraction and multitasking, he says the software will enable workers to reach new levels of intense focus. Tryba displays a series of charts, like a defragmenting hard drive, showing a worker’s day going from scattered distraction to solid blocks of uninterrupted productivity.

WorkSmart did, in fact, transform Rony’s day into solid blocks of productivity because if it ever determined he wasn’t working hard enough, he didn’t get paid. The software tracked his keystrokes, mouse clicks, and the applications he was running, all to rate his productivity. He was also required to give the program access to his webcam. Every 10 minutes, the program would take three photos at random to ensure he was at his desk. If Rony wasn’t there when WorkSmart took a photo, or if it determined his work fell below a certain threshold of productivity, he wouldn’t get paid for that 10-minute interval. Another person who started with Rony refused to give the software webcam access and lost his job.

Rony soon realized that though he was working from home, his old office job had offered more freedom. There, he could step out for lunch or take a break between tasks. With Crossover, even using the bathroom in his own home required speed and strategy: he started watching for the green light of his webcam to blink before dashing down the hall to the bathroom, hoping he could finish in time before WorkSmart snapped another picture.

The metrics he was held to were extraordinarily demanding: about 35,000 lines of code per week. He eventually figured out he was expected to make somewhere around 150 keystrokes every 10 minutes, so if he paused to think and stopped typing, a 10-minute chunk of his time card would be marked “idle.” Each week, if he didn’t work 40 hours the program deemed productive, he could be fired, so he estimated he worked an extra 10 hours a week without pay to make up the time that the software invalidated. Four other current and former Crossover workers — one in Latvia, one in Poland, one in India, and another in Bangladesh — said they had to do the same.

“The first thing you’re going to lose is your social life,” Rony said. He stopped seeing friends because he was tethered to his computer, racing to meet his metrics. “I usually did not go outside often.”

As the months went on, the stress began to take a toll. He couldn’t sleep. He couldn’t listen to music while he worked because the software saw YouTube as unproductive and would dock his pay. Ironically, his work began to suffer. “If you have freedom, actual real freedom, then I can take most pressure, if needed,” he said. But working under such intense pressure day after day, he burned out and his productivity dissolved.

Tryba said the company is a platform that provides skilled workers to businesses, as well as the tools to manage them; it’s up to the businesses to decide whether and how those tools are used. He said people shouldn’t have to work additional hours without pay, and that if WorkSmart marks a timecard as idle, workers can appeal to their manager to override it. If workers need a break, he said they can hit pause and clock out. Asked why such intense monitoring was necessary, he said remote work was the future and will give workers greater flexibility, but that employers will need a way to hold workers accountable. He added that the data collected would create new opportunities to coach workers on how to be more productive.

Crossover is far from the only company that has sensed an opportunity for optimization in the streams of data produced by digital workers. Microsoft has its Workplace Analytics software, which uses the “digital exhaust” produced by employees using the company’s programs to improve productivity. The field of workforce analytics is full of companies that monitor desktop activity and promise to detect idle time and reduce head count, and the optimization gets sharper-edged and more focused on individual workers the further down the income ladder you go. Staff.com’s Time Doctor, popular with outsourcing companies, monitors productivity in real time, prompts workers to stay on task if it detects they’ve become distracted or idle, and takes Crossover-style screenshots and webcam photos.

Sam Lessin, a former Facebook VP who co-founded the company Fin, describes a plausible vision for where all this is headed. Fin started as a personal assistant app before pivoting to the software it used to monitor and manage the workers who made the assistant run. (A worker described her experience handling assistant requests as being like a call center but with heavier surveillance and tracking of idle time.) Knowledge work currently languishes in a preindustrial state, Lessin wrote in a letter at the time of the pivot, with employees often sitting idle in offices, their labor unmeasured and inefficient. The hoped-for productivity explosion from AI won’t come from replacing these workers, Lessin wrote, but from using AI to measure and optimize their productivity, just as Frederick Taylor did with factory workers. Except this will be a “cloud factory,” an AI-organized pool of knowledge workers that businesses can tap into whenever they need it, much like renting computing power from Amazon Web Services.

“The Industrial Revolution, at least in the short term, was obviously not good for workers,” Lessin acknowledged in the letter. The cloud factory will bring a wave of globalization and de-skilling. While highly measured and optimized workplaces are meritocratic, he said, meritocracy can be carried to an extreme, citing the movie Gattaca. Ultimately, he argued, these risks are outweighed by the fact that people can specialize in what they’re best at, will have to work less, and will be able to do so more flexibly.

For Rony, Crossover’s promise of flexibility proved to be an illusion. After a year, the surveillance and unrelenting pressure became too much, and he quit. “I was thinking that I lost everything,” he said. He’d given up his stable office job, lost touch with friends, and now he was worrying whether he could pay his bills. But after three months, he found another job, one in an old-fashioned office. The wage was worse, but he was happier. He had a manager who helped him when he got stuck. He had lunch breaks, rest breaks, and tea breaks. “Whenever I can go out and have some tea, fun, and head to the office, there is a place I can even sleep. There’s a lot of freedom.”


Work has always meant giving up some degree of freedom. When workers take a job, they might agree to let their boss tell them how to act, how to dress, or where to be at a certain time, and this is all viewed as normal. Employers function as what the philosopher Elizabeth Anderson critically calls “private governments,” and people accept their exercising power in ways that would seem oppressive coming from a state because, the reasoning goes, workers are always free to quit. Workers also grant their employers wide latitude to surveil them, which is likewise seen as basically fine, drawing concern mostly when employers reach into workers’ private lives.

Automated management promises to change that calculus. While an employer might have always had the right to monitor your desktop throughout the day, it probably wouldn’t have been a good use of their time. Now such surveillance is not only easy to automate, it’s necessary to gather the data needed to optimize work. The logic can appear irresistible to a company trying to drive down costs, especially if they have a workforce large enough for marginal improvements in productivity to pay off.

But workers who tolerated the abstract threat of surveillance find it far more troubling when that data is used to dictate their every move. An Amazon worker in the Midwest described a bleak vision of the future. “We could have algorithms connected to technology that’s directly on our bodies controlling how we work,” he said. “Right now, the algorithm is telling a manager to yell at us. In the future, the algorithm could be telling a shock collar—” I laughed, and he quickly said he was only partly joking. After all, Amazon has patented tracking wristbands that vibrate to direct workers, and Walmart is testing harnesses that monitor the motions of its warehouse staff. Couldn’t you imagine a future where you have the freedom to choose between starving or taking a job in a warehouse, the worker said, and you sign a contract agreeing to wear something like that, and it zaps you when you work too slowly, and it’s all in the name of making you more efficient? “I think that’s a direction it can head, if more people aren’t more conscious, and there isn’t more organization around what’s actually happening to us as workers, and how society is being transformed by this technology,” he said. “Those are the things that keep me up at night, and that I think about when I’m in the warehouse now.”

That worker placed his hopes in unions, and in the burgeoning activism taking place in Amazon warehouses. There’s precedent for this. Workers responded to the acceleration of the last industrial revolution by organizing, and the pace of work became a standard part of union contracts.

The pace of work is only one facet of a larger question these technologies will force us to confront: what is the right balance between efficiency and human autonomy? We have unprecedented power to monitor and optimize the conduct of workers in minute detail. Is a marginal increase in productivity worth making innumerable people chronically stressed and constrained to the point they feel like robots?

You could imagine a version of these systems that collects workplace data, but it’s anonymized and aggregated and only used to improve workflows and processes. Such a system would reap some of the efficiencies that make these systems appealing while avoiding the individualized micromanagement workers find galling. Of course, that would mean forgoing potentially valuable data. It would require recognizing that there is sometimes value in not gathering data at all, as a means of preserving space for human autonomy.

The profound difference even a small degree of freedom from optimization can make was driven home when I was talking with a worker who recently quit a Staten Island Amazon warehouse to take a job loading and unloading delivery trucks. He had scanners and metrics there, too, but they only measured whether his team was on track for the day, leaving the workers to figure out their roles and pace. “This is like heaven,” he told his co-workers.